Summary

Classical machine learning (CML) occupies nearly half of machine learning pipelines in production applications. Unfortunately, it fails to utilize the state-of-the-practice devices fully and performs poorly. State-of-the-practice and state-of-the-art CML frameworks provide ad-hoc solutions, implementing each CML model on every hardware device case by case due to the lack of unified abstractions. These ad-hoc solutions raise considerable difficulties in developing a general-purpose framework and optimization techniques to achieve optimal performance for every model. They either lack the support or only partially support various hardware devices, such as GPUs, FPGAs, and IoT devices. In addition, adding support for a model on a new hardware device needs great effort, more than several thousands of lines of codes, let alone hundreds or thousands of models and devices. Moreover, they also face performance issues. Even on the CPUs -- the most popular CML platform, the performance is unsatisfactory due to the lack of specific optimizations for advanced characteristics like multi-cores and SIMD. CML suffers from severe performance and portability issues.

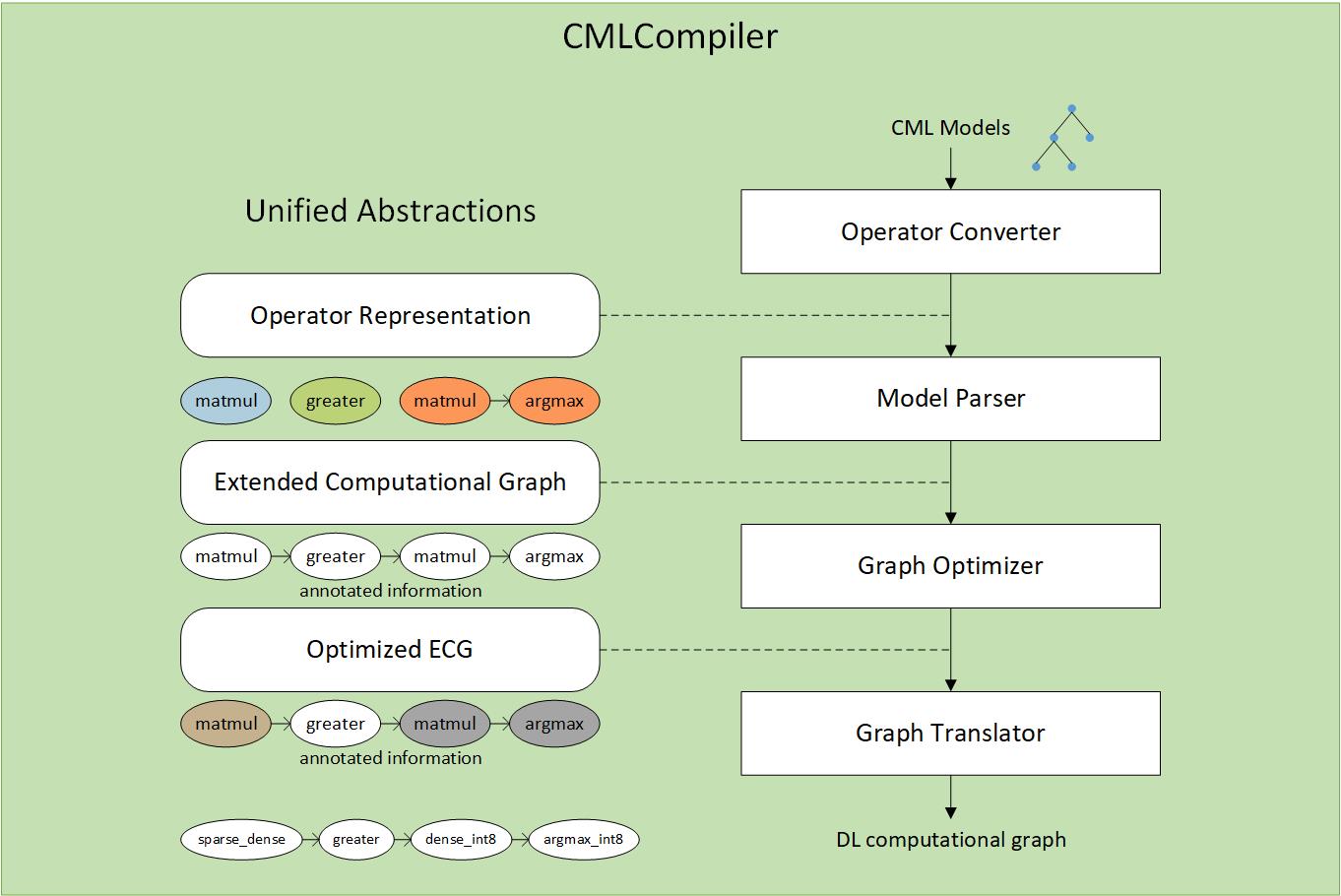

CMLCompiler aims to solve the performance and portabiltiy issue of CML models. At the core of CMLCompiler are two unified abstractions: operator representations and extended computational graphs (ECGs) and a compiler framework. Operator representations convert CML operators into tensor formats, while an ECG organizes these converted operators in an optimization-friendly way. The two unified abstractions define how to convert and translate CML models into Deep Learning (DL) computational graphs, which can be recognized and executed by DL frameworks and compilers. The CMLCompiler framework consists of four modules -- operator converter, model parser, graph optimizer, and graph translator. CMLCompiler is implemented on TVM and achieves obvious speedup compared to the state-of-the-art solutions.

The Unified Abstractions

At the core of CMLCompiler are two unified abstractions. Operator representations are used to represent CML operators in tensor format. Extend computational graph (ECG) organizes operator representations in an optimization-friendly way and can be used to represent CML models.

(1) Operator Representation

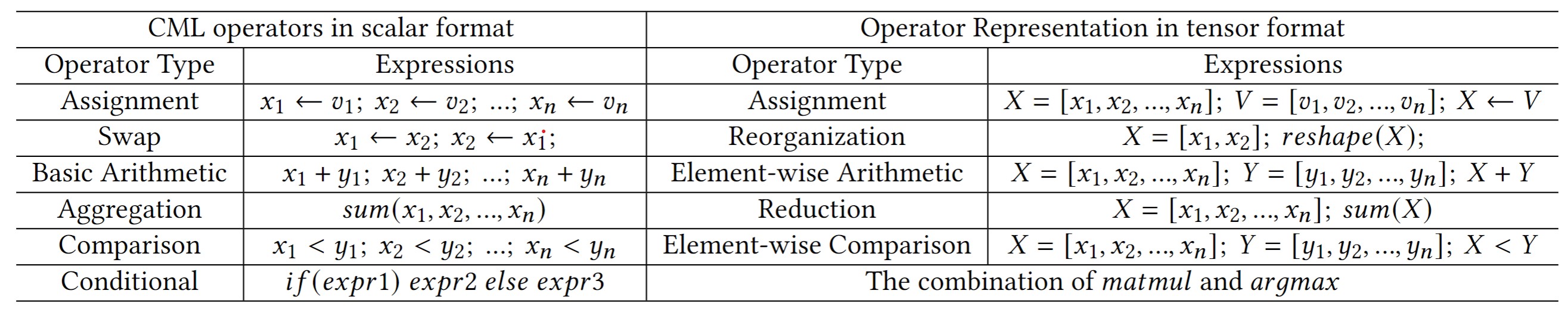

An operator representation uses a combination of one or more DL operators with tensors as input and output to represent a CML operator. CMLCompiler converts CML operators into DL operators and wrap them in the format of operator representations. Data in CML has mainly four formats: arrays, matrices, scalars, and tables. Matrices and arrays are regarded as two types of tensors whose operators can naturally be converted into DL operators. When CML models deal with tables, they take numeric data from tables and operate it, which can also be regarded as scalars. Hereby, CMLCompiler focus on the operators on scalars. As shown in Table 1, CMLCompiler classifies CML operators into six categories and provide operator representations, respectively.

Table 1: The summary of operator representation

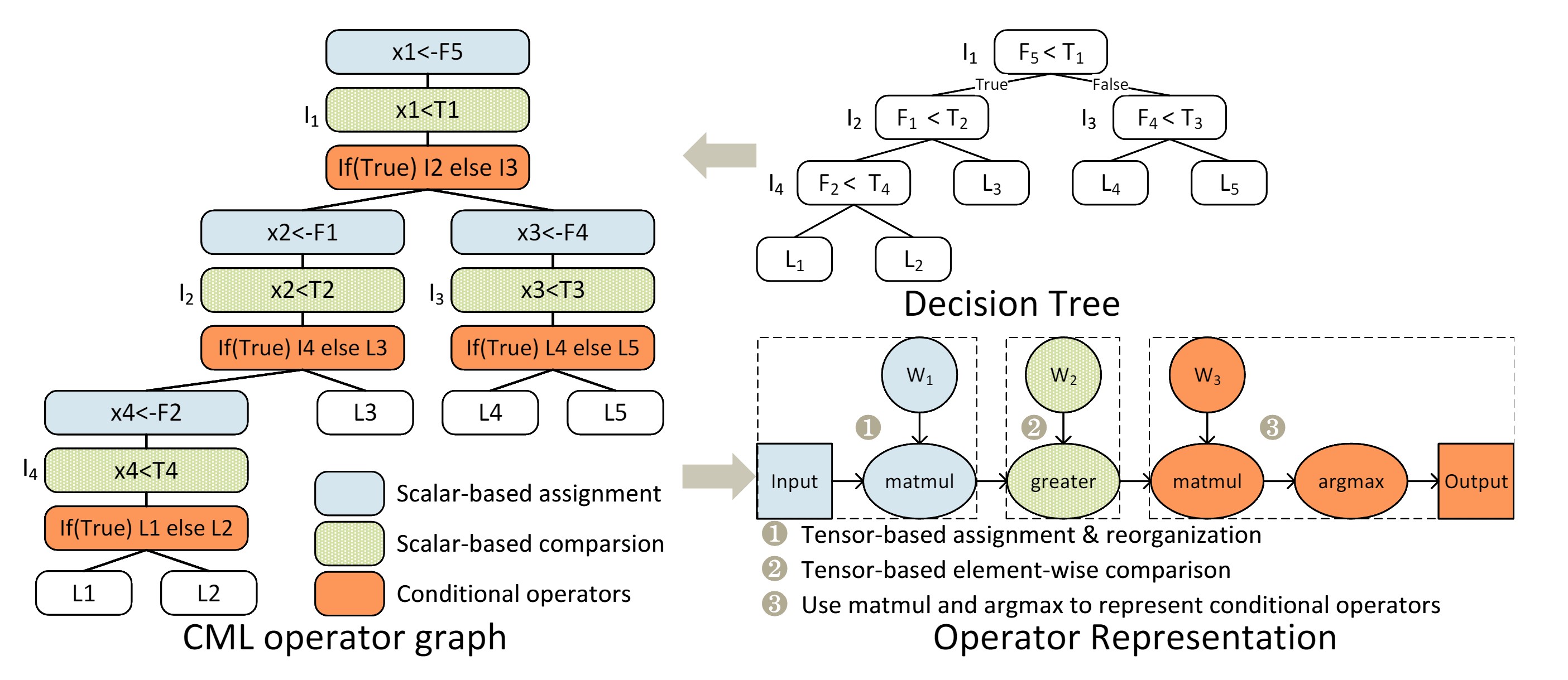

Fig.1 uses Decision Tree as an example to show the operator representations of confidential operators. The top-right shows the original decision tree. The left shows the corresponding CML operator graph. These scalar-based CML operators are converted to operator representations, as shown in the bottom-right in Fig. 1. Multi Scalar-based assignments are converted to one Tensor-based assignment. The data processing order differs from its layout in the tensor, we use the reorganization operator to change the tensor layout, which is replaced by matmul with 0-1 matrix. Multi Scalar-based comparisons are converted to one Tensor-based element-wise comparison. Sequential conditional operators are represented by the combination of matmul and argmax. Output returns the leaf nodes every sample reaches. Finally, a decision tree is converted to the combination of three operator representations.

Figure 1: Converting Decision Tree to operator representations.

(2) Extended Computational Graph

Extended computational graph (ECG) organizes operator representations in an optimization-friendly way and can be used to represent CML models. ECG is an extension based on DL computational graph, adding data type and sparsity as property. From the perspective of the DL frameworks and compilers, computational graphs are dense and float32 by default, such as neural network models. Using approximate optimizations like pruning and quantization brings sparse and low-precision data to all operators and weights. These optimizations cause a decrease in accuracy and bring extra computation, such as calibration. When we convert CML operators to operator representations, part of those converted operators and weights are sparse and low-precision naturally. Using DL computational graphs to represent CML models directly is not precise enough and ignores many optimization opportunities due to the data type and sparse features. So we extend the computational graph in the DL systems into extended computational graph (ECG) as the unified abstraction for CML models.

We classify the inputs of an operator into two categories: intermediate results and weights. Intermediate results are other operators' outputs and can only be handled during runtime. Input data is the first intermediate result in ECG, while output data is the last. Intermediate results are represented as {sparsity, dtype, tensor}. If we want to change the dtype of intermediate results, we should add dtype converting operator in the ECG. Weights are model parameters that can be loaded from trained models. Weights can be handled both during compilation and runtime, while a proper transformation during compilation can reduce runtime costs. Weights are represented as {sparsity, smallest_dtype, actual_dtype, tensor}. Smallest_dtype is the smallest dtype for weights without accuracy loss, actual_dtype is the dtype actually used. Smallest_dtype depends on the property of weights, while actual_dtype is fixed based on smallest_dtype and operators.

Operators are represented in the form of {weights, intermediate_results, use_sparse, type, dtype, DL_operator}. Weights and intermediate_results are inputs of operators. Use_sparse is a flag of whether using the sparse operator or not. Operator type is the type of operator. Operator dtype is the operators' data type, such as int8 matmul or float32 matmul. Operator dtype depends on the dtype of weights and intermediate_results. DL_operator is the native definition of operators in DL computational graphs, which we use to translate ECG to DL computational graphs.

CMLCompiler Framework

As shown in Fig. 2, CMLCompiler Framework includes four parts:Operator Converter, Model Parser, Graph Optimizer, and Graph Translator.

Figure 2: CMLCompiler Framework

(1) Operator Converter

Operator Converter traverses the operators in CML models and converts them into operator representations, respectively. Several assignment operators without data dependencies are converted to one tensor assignment. If the data processing order differs from its layout in the tensor, we use the reorganization operator to change the tensor layout. Basic arithmetic operators are converted to element-wise arithmetic operators. Aggregation operators are converted to reduction operators. Comparison operators are converted to element-wise comparison. Sequential conditional operators are represented as the combination of matmul and argmax. These converted DL operators are wrapped into operator representations with data dependencies.

(2) Model Parser

Model Parser organizes those operator representations in an optimization-friendly way and uses ECGs to represent CML models. Operators in an operator representation are initialized as nodes in an ECG, and edges are built between nodes according to data dependencies. When all operators are visited, the ECG is established.

(3) Graph Optimizer

Graph Optimizer performs graph-level optimizations, using a functionally equivalent transformation for ECGs. These optimizations are based on the features of CML models and do not influence accuracy. There are three specific graph rewriting optimizations: dtype rewriting, sparse operator replacing, and redundant elimination. Dtype rewriting uses low-precision computation with faster speed and less memory to replace high-precision computation. Sparse operator replacing replaces dense operators with sparse operations. Redundant elimination eliminates those operators who do not influence final results due to their mathematical properties.

(4) Graph Translator

Graph Translator converts the optimized ECG into DL computational graph based on ECG topology and chooses the proper operator implementation. DL frameworks or compilers provide different implementations for the same operator. Graph Translator utilizes the properties in ECG to choose the most proper implementation. Operator implementation can also be modulated based on hardware information. We can utilize hardware features to optimize operators.

Contributors

Xu Wen, ICT, Chinese Academy of Sciences

Dr. Wanling Gao, ICT, Chinese Academy of Sciences

Anzheng Li, ICT, Chinese Academy of Sciences

Dr. Zihan Jiang, Huawei

Dr. Lei Wang, ICT, Chinese Academy of Sciences

Prof. Jianfeng Zhan, ICT, Chinese Academy of Sciences, and BenchCouncil

License

CMLCompiler is open-source under the Apache License, Version 2.0. Please use all files in compliance with the License. If you want to use our CMLCompiler, you must understand and comply with the license. Software developed externally (not by CMLCompiler groups)

- Redistribution of source code must comply with the license and notice disclaimers

- Redistribution in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimers in the documentation and/or other materials provided by the distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE ICT CHINESE ACADEMY OF SCIENCES BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.