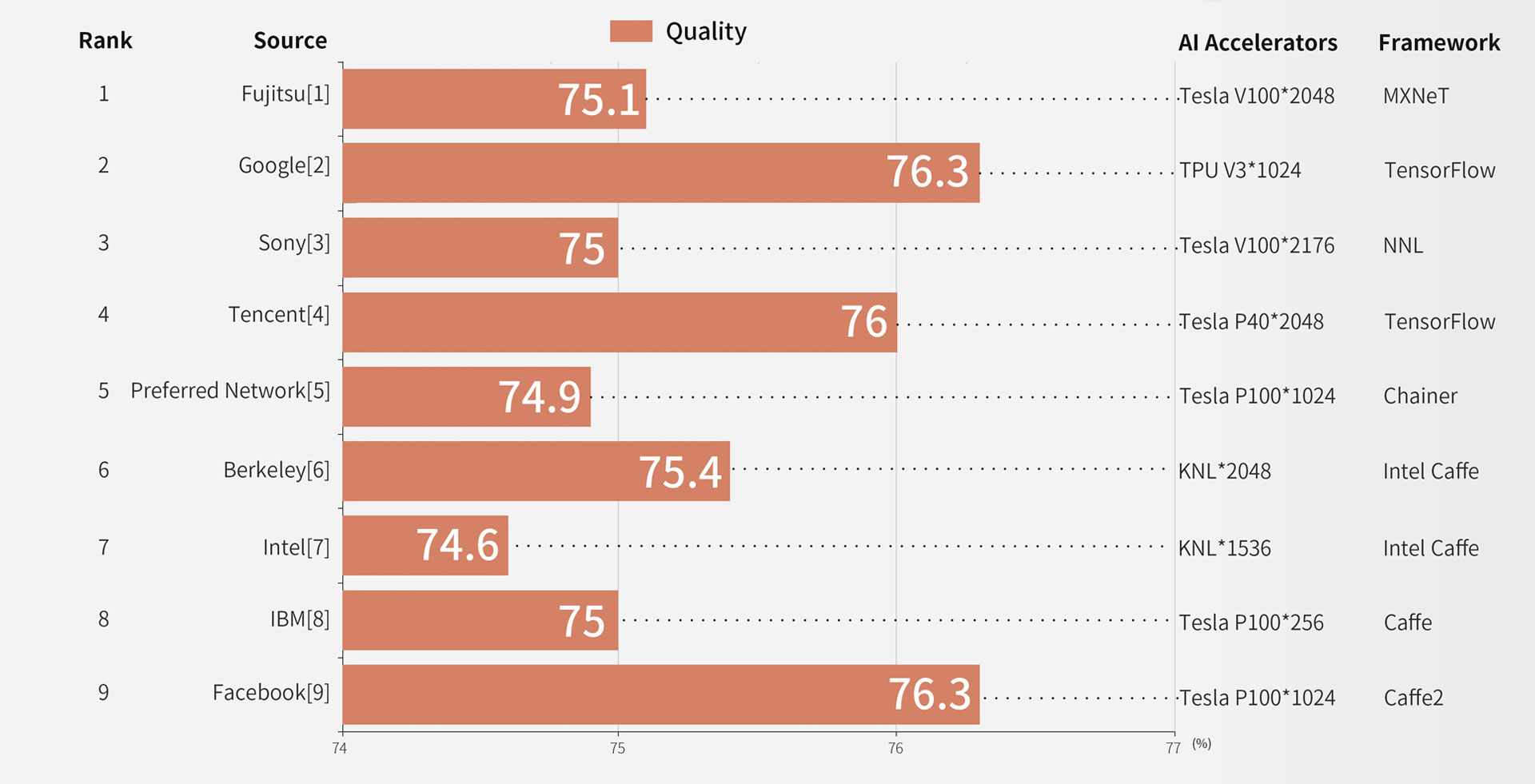

HPC AI500 Ranking

HPC AI500 Ranking, Image Classification, Free Level, July 2, 2020

The data in this list is collected from the original papers and technical reports and has not been verified.

- Valid FLOPS (VFLOPS) Metric. We provide a new metric, Valid FLOPS (VFLOPS), that considers both the FLOPS and the quality target of HPC AI. VFLOPS is calculated as VFLOPS = FLOPS * (achieved_quality / target_quality)^n, where achieved_quality is the actual model quality achieved in the evaluation and target_quality is the state-of-the-art model quality predefined in the HPC AI500 benchmark. The value of n is a positive integer that defines the sensitivity to the model quality. In image classification, the target quality is 76.3% top-1 accuracy and n defaults to 5.
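The VFLOPS formula above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the benchmark's reference implementation: the function name `valid_flops` and its parameter names are hypothetical, and quality is passed as a fraction (0.763 for 76.3%), although percentages work equally well because only the ratio matters.

```python
def valid_flops(flops: float,
                achieved_quality: float,
                target_quality: float = 0.763,
                n: int = 5) -> float:
    """Return VFLOPS = FLOPS * (achieved_quality / target_quality) ** n.

    Defaults follow the image classification setting described above:
    target top-1 accuracy of 76.3% and n = 5. The function itself is a
    hypothetical helper, not part of HPC AI500.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    if target_quality <= 0:
        raise ValueError("target_quality must be positive")
    return flops * (achieved_quality / target_quality) ** n


# Hypothetical measurement: 1 PFLOPS measured, 75.0% top-1 accuracy achieved.
measured_flops = 1.0e15
print(valid_flops(measured_flops, achieved_quality=0.750))
```

Because the quality ratio is raised to the power n, a run that falls short of the target quality is penalized more than proportionally, while a run that exactly meets the target keeps its measured FLOPS unchanged.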

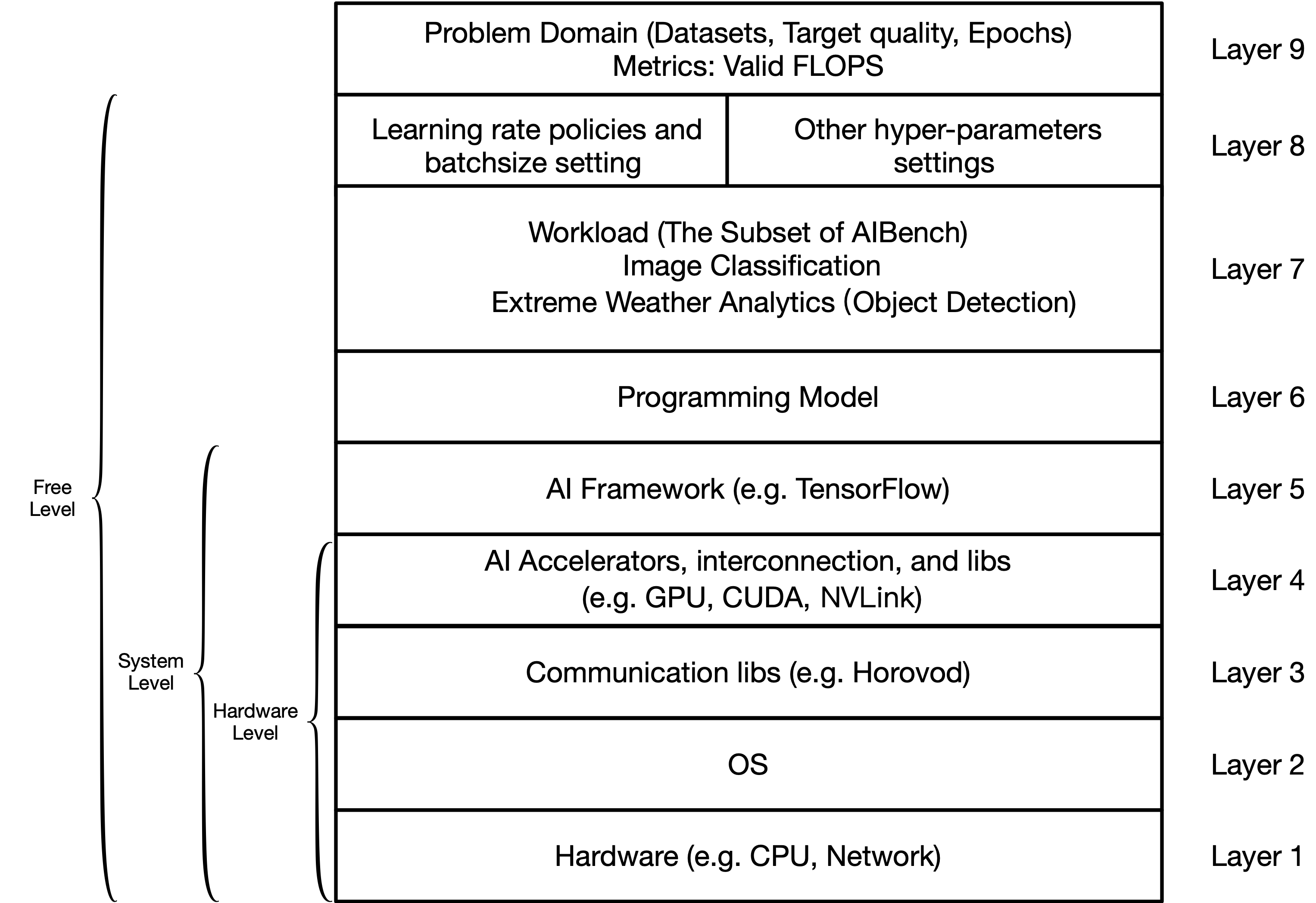

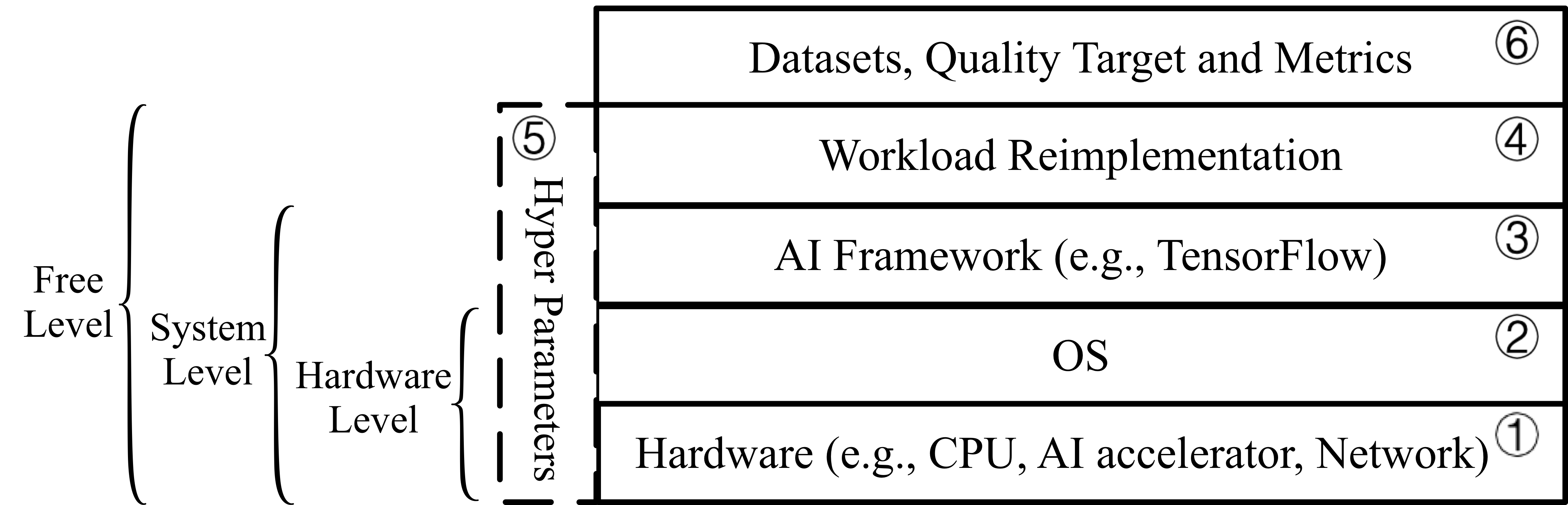

- Methodology. The HPC AI500 benchmarking methodology provides three benchmarking levels: free level, system level, and hardware level. The ranking list is evaluated using ImageNet/ResNet50 under the free level methodology. At the free level, users can change any layer from Layer 1 to Layer 8 while keeping Layer 9 intact. Layer 9 defines the same data set, target quality, and training epochs for all submissions, while the other layers are open for optimization.

Figure 1: The equivalent perspective of HPC AI500 Methodology.

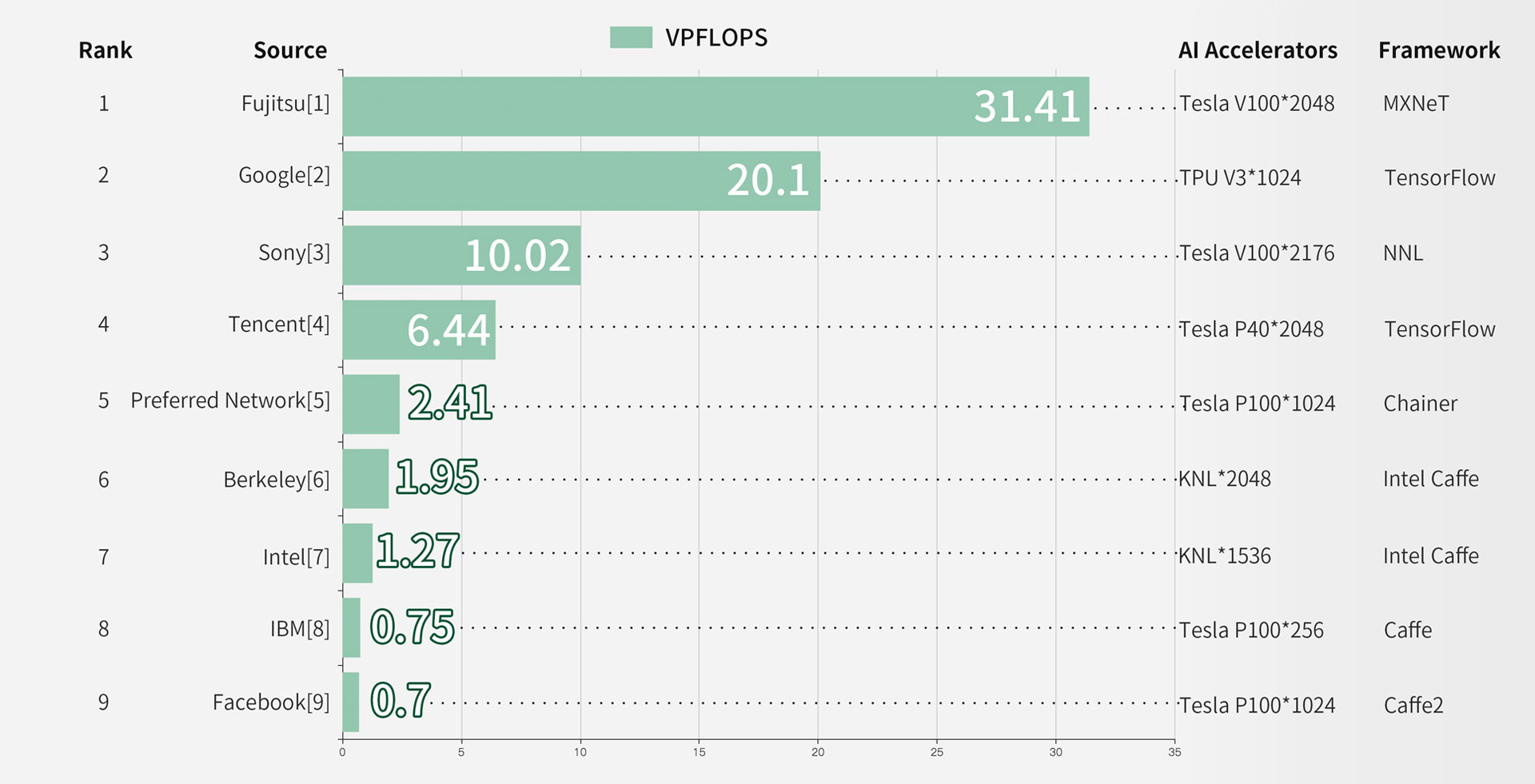

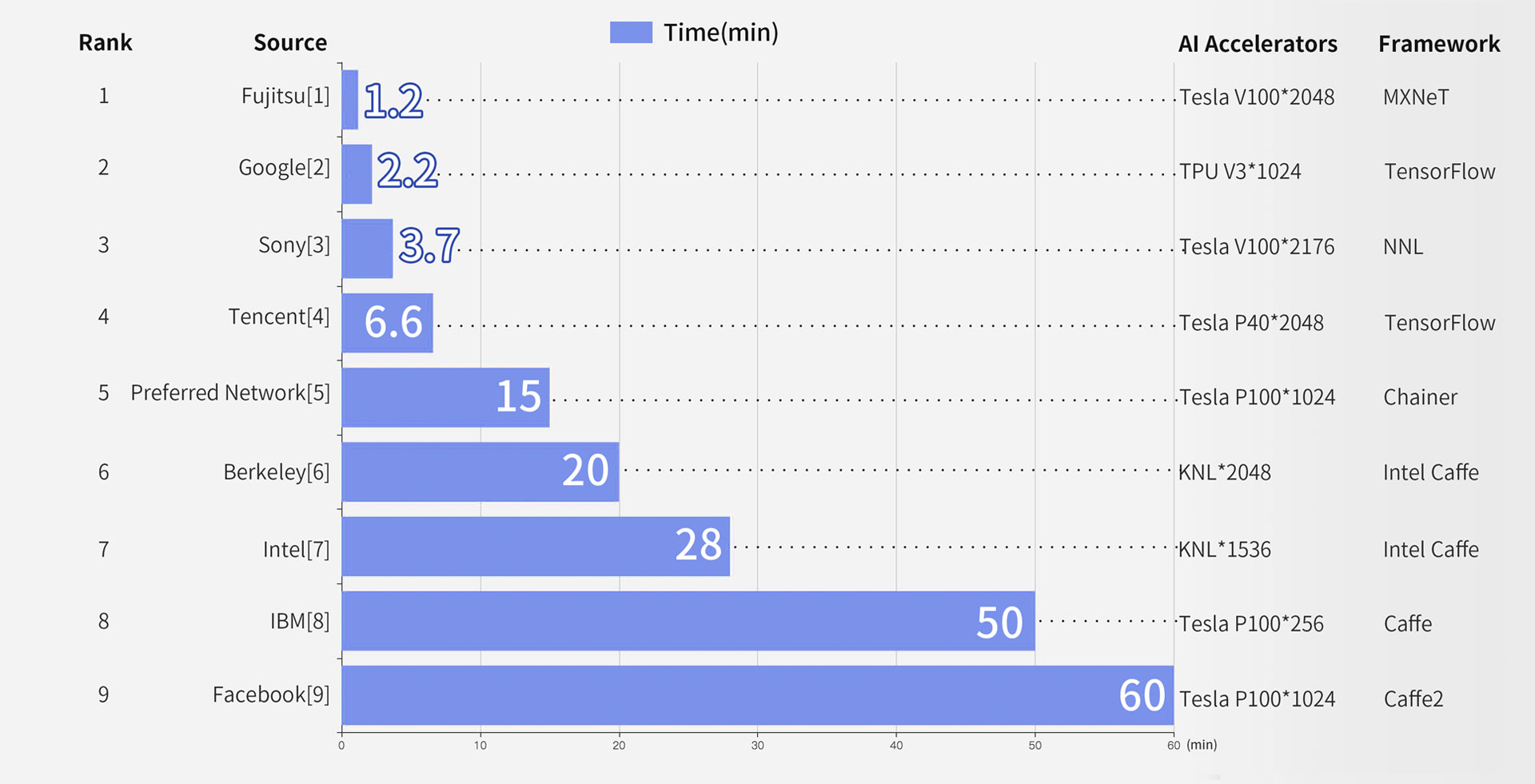

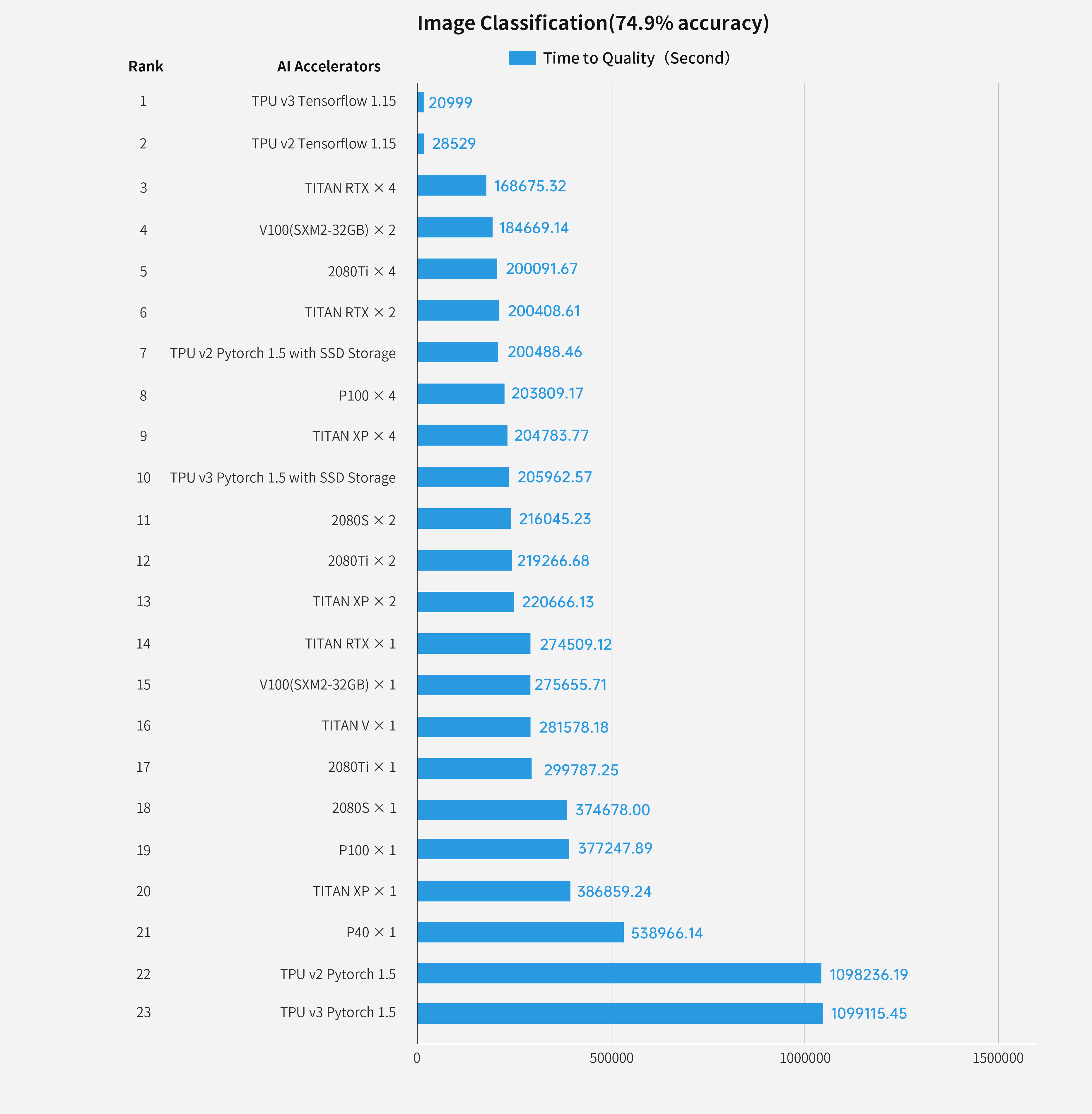

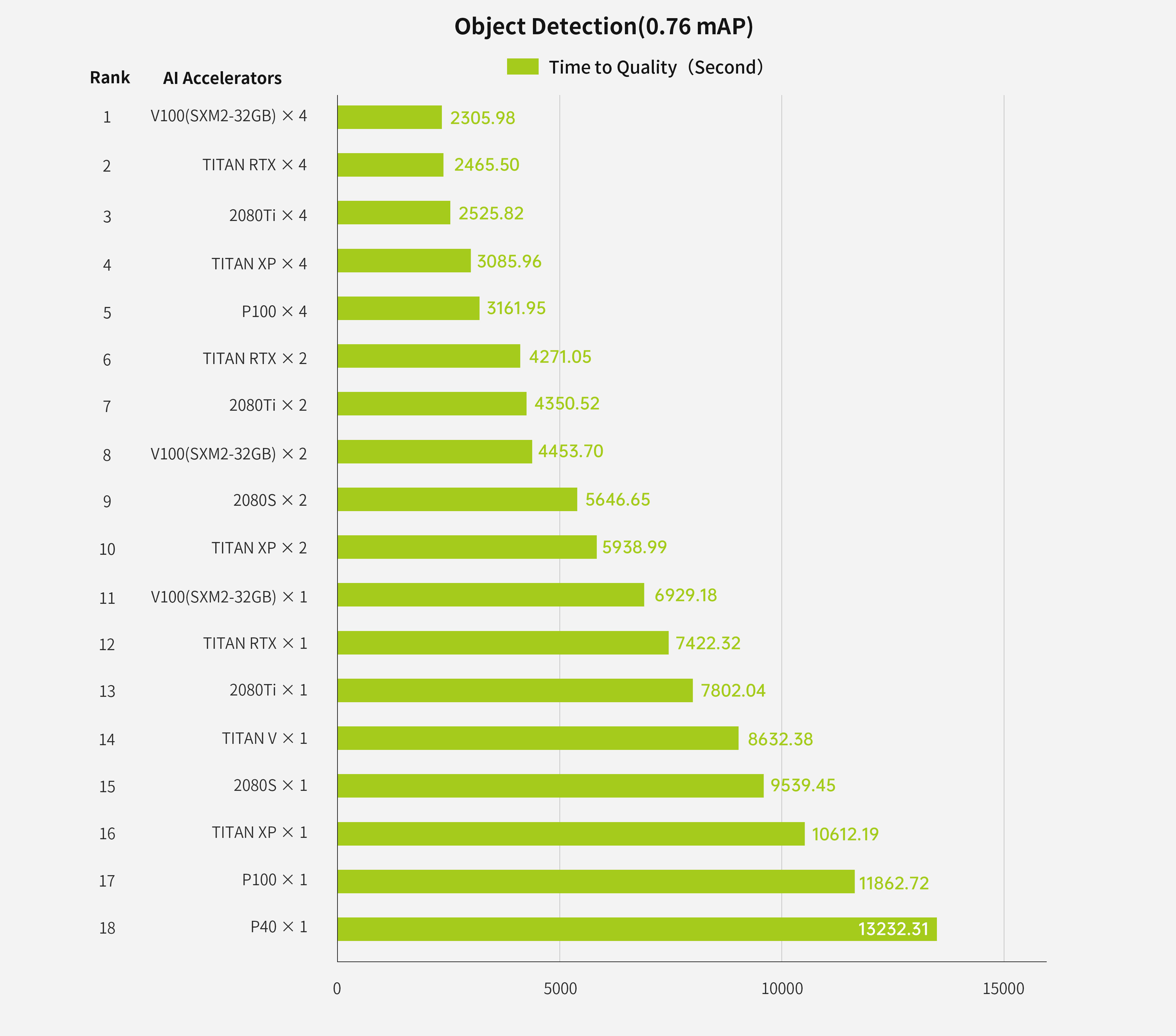

Datacenter AI Ranking

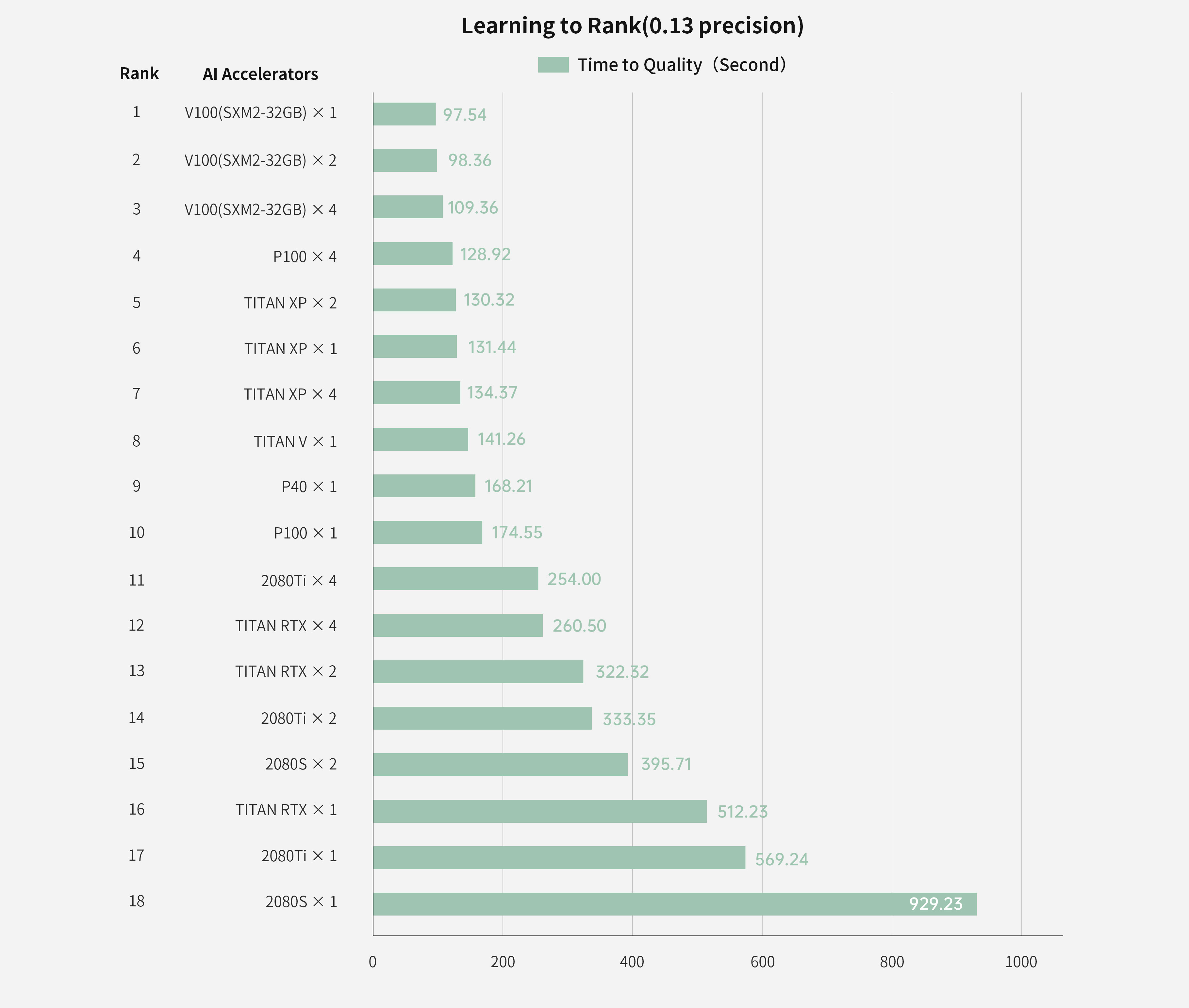

AIBench Training Ranking (Image Classification, Object Detection, Learning to Rank), System Level, September 8, 2020

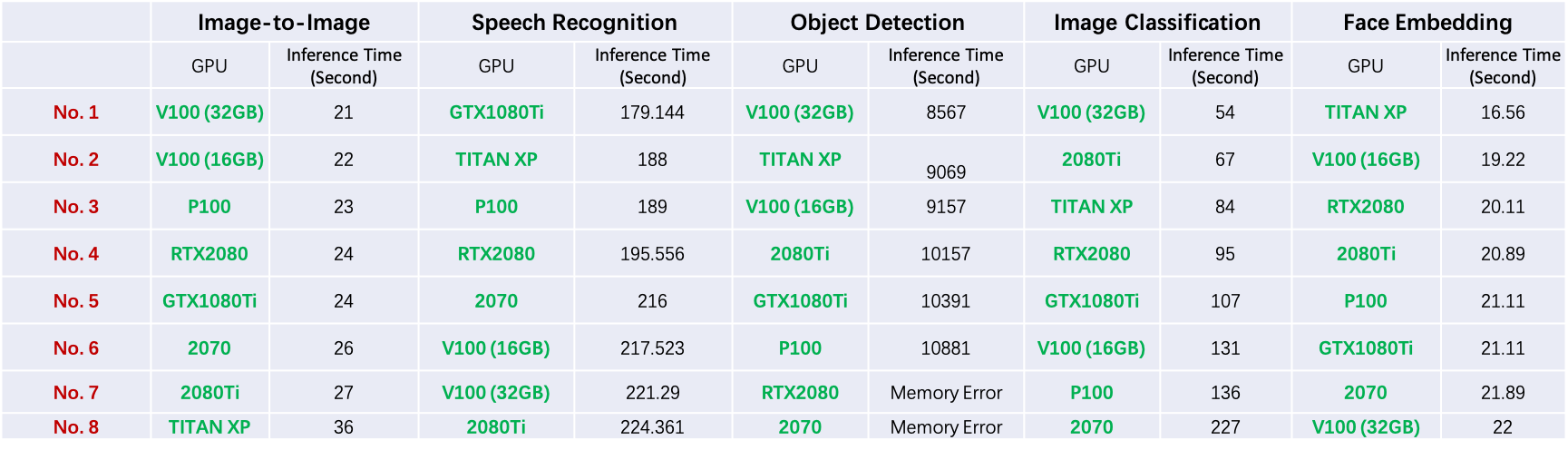

AIBench Inference Ranking (Image Classification, Image-to-Image, Speech Recognition, Object Detection, Image-to-Text, and Face Embedding), September 26, 2019

Single-GPU Inference

- Metrics. This ranking list uses time-to-quality as the metric for the training ranking and inference time as the metric for the single-GPU inference ranking.

- Methodology. Datacenter AI Ranking uses AIBench, by far the most comprehensive and representative AI benchmark suite. The methodology is available from the AIBench TR. We choose six benchmarks from AIBench to evaluate these eight GPUs: image classification, image-to-image, speech recognition, object detection, image-to-text, and face embedding.

AIoT Ranking

AIoTBench Ranking, Image Classification, May 7, 2020

| Rank | Device | SoC | Process | RAM | Android | AI Score (VIPS) | AI Score (VOPS) |
|---|---|---|---|---|---|---|---|
| 1 | Galaxy s10e | Snapdragon 855 | 7nm | 6GB | 9 | 140.40 | 151.19G |
| 2 | Honor v20 | Kirin 980 | 7nm | 8GB | 9 | 82.73 | 92.79G |
| 3 | Vivo nex | Snapdragon 710 | 10nm | 8GB | 9 | 45.11 | 48.05G |
| 4 | Vivo x27 | Snapdragon 710 | 10nm | 8GB | 9 | 44.61 | 47.87G |
| 5 | Oppo R17 | Snapdragon 670 | 10nm | 6GB | 8.1 | 33.40 | 34.15G |

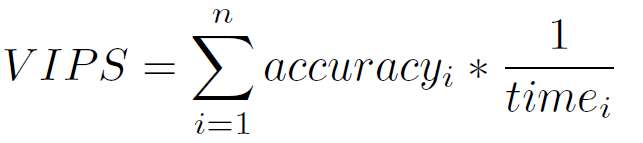

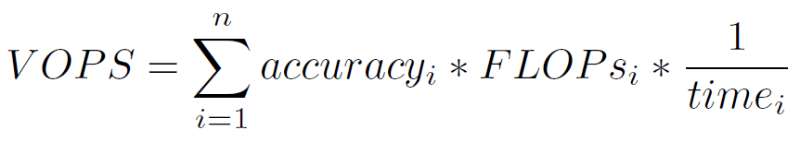

- Metrics. Two unified metrics are used as the AI scores: Valid Images Per Second (VIPS) and Valid FLOPs Per Second (VOPS). VIPS is a user-level or application-level metric, since the number of images that can be processed is the end user's concern. VOPS is a system-level metric that reflects the valid computation the system can process per second.

- Methodology. AIoTBench focuses on the task of image classification. The methodology is available from the AIoTBench TR. A subset (5000 images) of the ImageNet 2012 classification dataset is used in our benchmark. The tests include 6 models: ResNet50 (re for short), InceptionV3 (in), DenseNet121 (de), SqueezeNet (sq), MobileNetV2 (mo), and MnasNet (mn). For each model, we test the implementations in PyTorch Mobile (py), Caffe2 (ca), TensorFlow Lite with CPU (tfc), and TensorFlow Lite with the NNAPI delegate (tfn).
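The six models and four framework implementations described above give 24 test combinations per device. The sketch below enumerates them using the abbreviations from the text. The combined label format (framework abbreviation, underscore, model abbreviation) and the function name `test_matrix` are assumptions made for illustration and may not match the labels AIoTBench itself uses.

```python
from itertools import product

# Abbreviations as given in the methodology description.
MODELS = {
    "re": "ResNet50",
    "in": "InceptionV3",
    "de": "DenseNet121",
    "sq": "SqueezeNet",
    "mo": "MobileNetV2",
    "mn": "MnasNet",
}
FRAMEWORKS = {
    "py": "PyTorch Mobile",
    "ca": "Caffe2",
    "tfc": "TensorFlow Lite (CPU)",
    "tfn": "TensorFlow Lite (NNAPI delegate)",
}


def test_matrix():
    """List every (label, framework, model) combination.

    The label format "<framework>_<model>" is a hypothetical convention.
    """
    return [
        (f"{fw}_{model}", FRAMEWORKS[fw], MODELS[model])
        for model, fw in product(MODELS, FRAMEWORKS)
    ]


for label, framework, model in test_matrix():
    print(f"{label}: {model} on {framework}")
```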